Clawdbot: More than you bargained for?

Why Clawdbot and why now?

In the past few months, we've witnessed rapid growth in AI personal assistants and automation frameworks. One of the most interesting is PAI (short for Personal AI Infrastructure), authored by Daniel Miessler and open-sourced for the public to participate in.

The early versions of PAI required quite a bit of tweaking to get them working fully and customized for you. But as Gal Malka, VP of Engineering at Zenity, says: "If there is too much friction, people are not going to go for it." While recent versions of PAI (v2.4.0 at the time of writing) have simplified the installation process, it is still perceived as a "for technical users" product.

On the other hand, there’s Clawdbot. Initially released in November 2025, it has become one of the fastest-growing AI personal assistants of the last couple of months. Marketed as a “local, always-on AI assistant” that can handle emails, calendars, file automation, messaging workflows (WhatsApp, Telegram, Discord, Slack, etc.), and even financial automation, it is generating excitement across hobbyist, developer, and professional audiences.

Watching YouTube reviews, one will hear quotes such as "I have seen the future and it is right here, on this MacMini… This is the best technology I've ever used in my life." (Alex Finn 284K views) or "Clawdbot is taking the world by storm right now and it is absolutely blowing my mind as well as the minds of everybody that's using it." (Matt Wolfe ~49K views). Community highlights like “I installed it yesterday… and already everything is automated” and “runs 24/7 on a $5/month server” reflect how quick and simple the experience feels for many users.

Another part of the appeal stems from the fact that Clawdbot is designed to run locally on your machine (or server platform, if you insist), supposedly giving users control over their data flow and workflow automation without needing to trust external servers.

At this point, it's clear that Clawdbot is an easy sell. But let's see what else is included inside the box.

The Caveats

Installing a persistent server on your machine

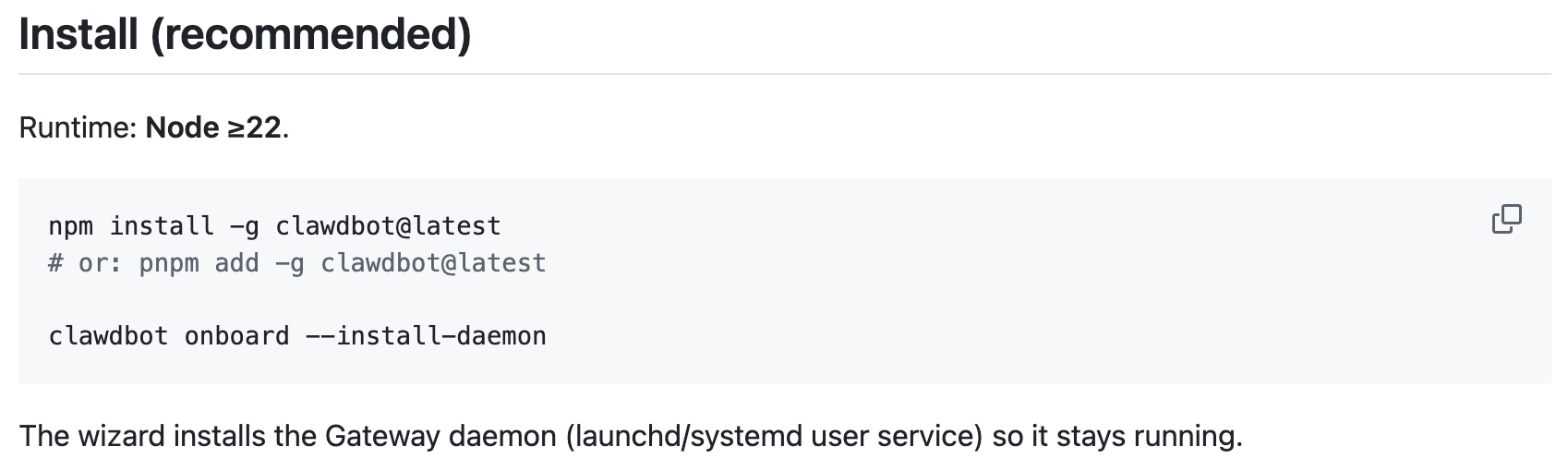

One of the standout aspects of Clawdbot’s recent adoption is the ease with which it installs:

But herein lies the risk:

"The wizard installs the Gateway daemon (launchd/systemd user service) so it stays running."

The script installs a Gateway, a server that accepts requests over the network, and keeps it running as a persistent service. Security-savvy users will notice this line and understand its inherent risks, but most users will do neither.

This is not theoretical. Shodan users across the internet have already reported hundreds of Clawdbot gateways popping up, most of them unsecured (see for yourself here). Moreover, Clawdbot users are already reporting being scanned and attacked: "This is insane we were attacked 7,922 times over the weekend after using Clawdbot." (Shruti Gandhi).
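
A quick way to reason about this exposure is to check which addresses a local service actually answers on. The following is a minimal sketch, not part of Clawdbot: the function names are hypothetical, and the port and LAN address are values you would have to look up in your own configuration.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, or unreachable: treat all as "not open".
        return False


def classify_exposure(on_loopback: bool, on_other_address: bool) -> str:
    """Summarize how far a service is reachable from the probe results."""
    if on_other_address:
        # Reachable on a non-loopback address: other hosts may connect too.
        return "exposed beyond loopback"
    if on_loopback:
        return "loopback only"
    return "not listening"
```

For example, `classify_exposure(is_port_open("127.0.0.1", port), is_port_open(lan_ip, port))` gives a rough answer for a `port` and `lan_ip` that you supply. A probe from the same machine says nothing about firewalls or router rules, so treat the result as a first hint only.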

Running with high permissions

Clawdbot runs locally with high permissions. It has access to your files, folders, and calendars; to system commands when plugins are enabled; and to messaging platforms and third-party integrations when configured.

Clawdbot’s AI agents can - if configured loosely (read: configured using defaults or recommendations) - read, write, execute, and communicate with external services. With no sandboxing or permission controls enabled by default, these capabilities could be misused or exploited, whether through misconfiguration or through sophisticated attacks.

This concern is not hypothetical: Even the official security documentation warns that running an AI agent with shell access on your machine is “spicy” and that users must pay special attention to authentication policies, access allowlists, and file permissions. You can imagine how many of those users actually pay attention to the warning, and how many of them simply go ahead with the installation.

The default settings may not be safe for production use or personal data handling without tightening. This implies that most casual installs will lack robust protection unless the user deliberately hardens the environment.
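
As one small illustration of the file-permission hardening mentioned above, the sketch below lists files and directories that grant any access to the group or to other users. It assumes a POSIX system; the function name is hypothetical, and the directory you point it at (wherever your assistant keeps its configuration and tokens) is something you need to identify yourself.

```python
import stat
from pathlib import Path


def loose_permission_paths(root: Path) -> list[Path]:
    """Return paths under root (inclusive) with any group/other permission bit set."""
    loose = []
    for path in [root, *root.rglob("*")]:
        mode = path.lstat().st_mode
        if stat.S_ISLNK(mode):
            # Symlink permission bits are not meaningful; skip them.
            continue
        if mode & (stat.S_IRWXG | stat.S_IRWXO):
            loose.append(path)
    return sorted(loose)
```

An empty result means everything under the directory is restricted to the owner (for example `0600` files inside a `0700` directory). Anything the function returns is a candidate for `chmod go-rwx`.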

The Corporate Risk

So far we’ve talked about individual adopters. But the second major risk is the corporate one.

Because Clawdbot installs locally and functions as an endpoint, it bypasses traditional SaaS posture or monitoring tools entirely. In addition, it's hard for IT teams to keep track of everything users install on their boxes, and few products offer that kind of central visibility. This makes Clawdbot a classic “shadow IT” problem. This also draws parallels to the rapid MCP adoption, with the end of 2025 seeing many examples of shadow MCP implementations running inside enterprise environments on corporate endpoints.

When an employee installs it to streamline workflows (or just experiment), it runs quietly under their user credentials. It connects to messaging platforms, file systems, calendars - anything the employee might have configured into it. Security teams never see or log that traffic.

In this scenario, corporate secrets, sensitive data, and internal systems could unwittingly be exposed. The bot is driven by generative AI, so if it is misconfigured or manipulated it can be tricked into acting on behalf of attackers or turned into a vector for social engineering. Zenity has multiple blog posts stressing this point. Corporate endpoints exposed through Clawdbot could also be weaponized for malicious activity against others, further muddying the waters of attribution.

There is some security guidance emerging, even in the official documentation, but few users bother to RTFM unless they hit a wall of some sort.
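
To make the visibility gap concrete, here is a sketch of how an IT team might inventory per-user services on an endpoint. It scans two common per-user service locations, `~/Library/LaunchAgents` for launchd and `~/.config/systemd/user` for systemd, for file names containing a keyword. The function name is hypothetical, and the actual service file name Clawdbot uses is not given in this post, so the keyword is an assumption you would need to verify.

```python
from pathlib import Path

# Common per-user service locations: launchd (macOS) and systemd (Linux).
USER_SERVICE_DIRS = ("Library/LaunchAgents", ".config/systemd/user")


def find_user_services(home: Path, keyword: str) -> list[Path]:
    """List service definition files under home whose name contains keyword."""
    needle = keyword.lower()
    hits = []
    for rel in USER_SERVICE_DIRS:
        directory = home / rel
        if not directory.is_dir():
            continue  # Location does not exist on this platform or account.
        for entry in directory.iterdir():
            if entry.is_file() and needle in entry.name.lower():
                hits.append(entry)
    return sorted(hits)
```

Calling `find_user_services(Path.home(), "clawdbot")` on each endpoint would give a crude first inventory. A name match is only a heuristic: a renamed service or one installed in another location would be missed.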

Bottom Lines

For individuals, the problem is two-fold. They are less likely to have the security knowledge needed to understand and mitigate the risks, and they also lack access to in-house security teams or external vendors who could do that for them. What begins as harmless experimentation with a promising, trending tool could easily end poorly.

For corporations, most existing security controls either have no visibility into such tools or are not focused on them. Without the ability to analyze what the underlying LLM does, there is no way to predict or control what the machine does, and it takes only one malicious input carrying an indirect prompt injection to start causing serious damage.

Clawdbot represents an exciting direction for personal AI assistants: local, flexible, extensible, and easy to use. Individuals and corporations alike need to realize that this direction can't be avoided, and should prepare to monitor and control such tools.
