Inside Microsoft 365 Copilot: A Technical Breakdown

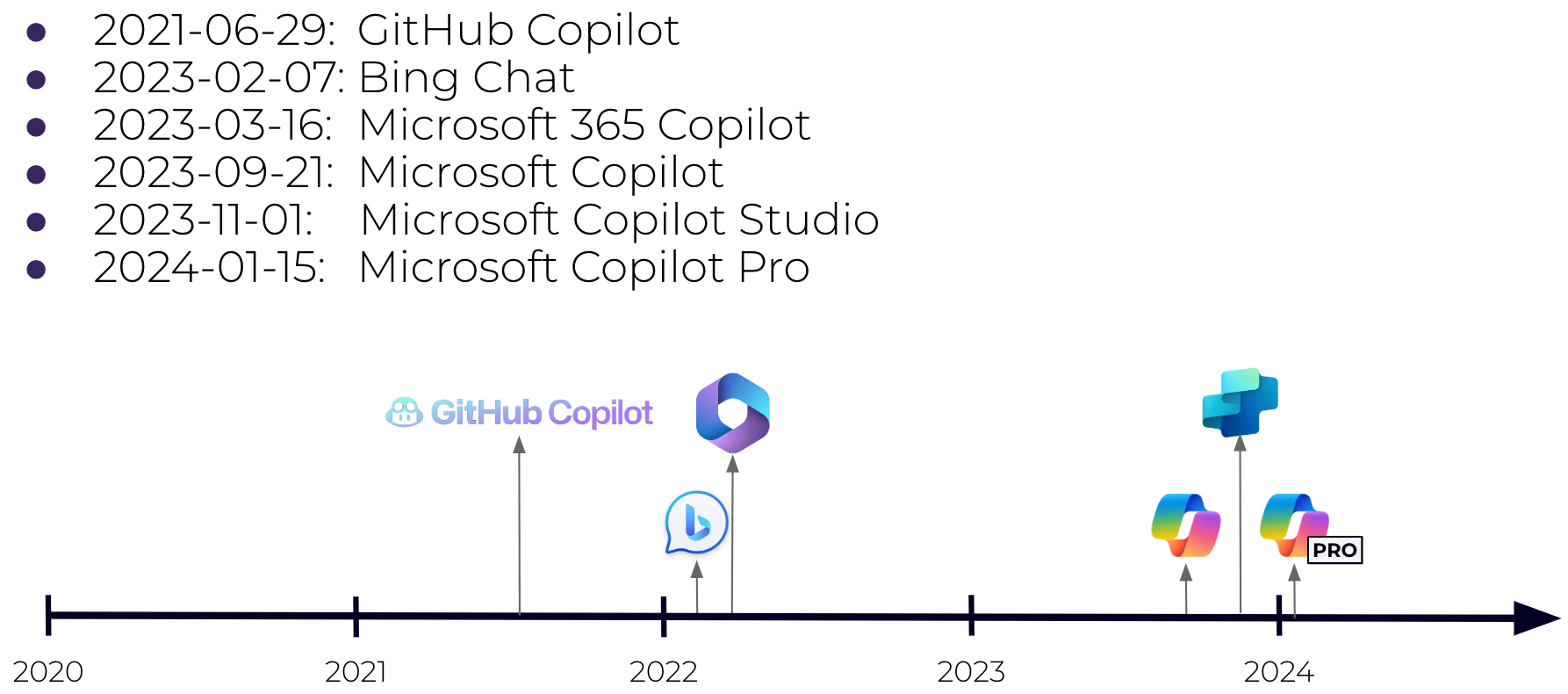

Microsoft Copilot is an LLM-powered AI assistant by Microsoft, similar to OpenAI's ChatGPT. Under the Copilot brand, Microsoft has released a variety of products. Here's a timeline of key releases:

Microsoft copilots timeline

The most interesting and complex copilot in this series of products is Copilot for Microsoft 365, the enterprise copilot. In this post, we'll dive deep into how Copilot works under the hood, according to Microsoft. In the following posts, we'll peel back the layers of Copilot for Microsoft 365 and reveal more (hidden) details about its architecture and how it really works.

What's so special about Copilot for Microsoft 365

Copilot for Microsoft 365 (referred to as Copilot from now on) is an AI assistant that combines an LLM with (almost) all of your organizational data, which makes it much more powerful than a "regular" ChatGPT-like assistant. Every conversation has the context of data from documents, emails, messages, etc., which makes Copilot useful in scenarios such as enterprise search and organizational content drafting.

How Copilot works

Copilot's architecture consists of three main components: the user interface, the large language model (LLM), and Microsoft Graph.

User interface - Copilot has multiple user interfaces:

General-purpose chat - A ChatGPT-like chat interface, enhanced with organizational context from Microsoft 365 applications. This interface is accessible via Microsoft Teams, the Copilot app in the Microsoft 365 portal, Windows 11, and mobile apps.

App-specific chat interface - A tailored chat can be found in almost every Microsoft productivity app, such as Word, PowerPoint, and Excel. The chat is specific to the content of the app but is still grounded in the organizational data. For example, you can create a slide in PowerPoint based on a Word document in SharePoint.

Copilot can be accessed from desktop and mobile devices, managed or unmanaged (depending on the organization’s policy).

The LLM - This is the large language model engine, powered by OpenAI's GPT (currently GPT-4 Turbo, according to Microsoft). It is a dedicated instance, hosted and maintained by Microsoft, which OpenAI has no access to.

Microsoft Graph - This has become the gateway to any organizational data within the Microsoft ecosystem. Graph's API pulls information from data sources such as SharePoint, OneDrive, Teams, and Exchange, along with the relationships between that data and users and groups. The data retrieved through Graph serves as the context for each user's prompt.
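To make the "gateway" idea more concrete, here is a minimal sketch of how a client application could address a few of those data sources through the Graph REST API. It only builds the requests and does not send them. The token is a placeholder, `build_graph_request` is a hypothetical helper, and this illustrates the public Graph API rather than the calls Copilot makes internally.

```python
from typing import Dict, Optional
from urllib.parse import urlencode
from urllib.request import Request

GRAPH_BASE = "https://graph.microsoft.com/v1.0"


def build_graph_request(path: str, access_token: str,
                        params: Optional[Dict[str, str]] = None) -> Request:
    """Build (but do not send) an authenticated GET request to Microsoft Graph."""
    # Keep "$" and "," readable so OData options such as $select stay intact.
    query = f"?{urlencode(params, safe='$,')}" if params else ""
    return Request(
        f"{GRAPH_BASE}{path}{query}",
        headers={"Authorization": f"Bearer {access_token}"},
    )


TOKEN = "<access-token>"  # placeholder: a real call needs a delegated OAuth token

# Exchange mail, OneDrive files, and group relationships of the signed-in user.
mail_request = build_graph_request(
    "/me/messages", TOKEN, {"$top": "5", "$select": "subject,from"}
)
files_request = build_graph_request("/me/drive/root/children", TOKEN)
groups_request = build_graph_request("/me/memberOf", TOKEN)

for request in (mail_request, files_request, groups_request):
    print(request.get_method(), request.full_url)
```

Because every request carries the user's own token, the results are limited to what that user is allowed to see.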

Let's go over the journey of a prompt in the Copilot system:

The prompt is sent from any of the user interfaces mentioned above.

Copilot fetches data from Microsoft Graph, the web and other extensions (which we'll discuss later). This data serves as the context of the user's prompt.

A crafted prompt, which includes the user's original prompt, the context, and other system instructions, is sent to the LLM.

The LLM processes the prompt and responds.

The response is returned to the user.

Preview for future posts: although the architecture seems linear, Copilot can decide to make multiple queries to the data sources, based on the user's prompt and previous results.
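The journey above can be sketched in a few lines of Python. This is a simplified, linear illustration under stated assumptions, not Copilot's actual implementation: the `CraftedPrompt` layout, the system instruction text, and the `fake_graph`, `fake_web`, and `fake_llm` stand-ins are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List

# A "source" is any callable that returns context snippets for a prompt
# (Microsoft Graph, the web, an extension). Hypothetical abstraction.
Source = Callable[[str], List[str]]


@dataclass
class CraftedPrompt:
    system_instructions: str
    context: List[str]
    user_prompt: str

    def render(self) -> str:
        """Combine instructions, context, and the user's prompt into one text."""
        context_block = "\n".join(f"- {snippet}" for snippet in self.context)
        return (
            f"[system]\n{self.system_instructions}\n\n"
            f"[context]\n{context_block}\n\n"
            f"[user]\n{self.user_prompt}"
        )


def handle_prompt(user_prompt: str, sources: List[Source],
                  llm: Callable[[str], str]) -> str:
    # Fetch context from every data source.
    context: List[str] = []
    for source in sources:
        context.extend(source(user_prompt))
    # Craft the prompt that is actually sent to the LLM.
    crafted = CraftedPrompt(
        system_instructions="Answer using only the context provided.",
        context=context,
        user_prompt=user_prompt,
    )
    # The LLM processes the crafted prompt; its response goes back to the user.
    return llm(crafted.render())


# Stand-ins so the sketch runs without any real service.
def fake_graph(prompt: str) -> List[str]:
    return ["Budget.docx: the Q3 budget is due on Friday"]


def fake_web(prompt: str) -> List[str]:
    return []


def fake_llm(crafted_text: str) -> str:
    return f"model received {len(crafted_text.splitlines())} lines"


print(handle_prompt("When is the Q3 budget due?", [fake_graph, fake_web], fake_llm))
```

The point of the sketch is that the model never sees the user's prompt alone: it always arrives wrapped in instructions and retrieved context.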

Responsible AI

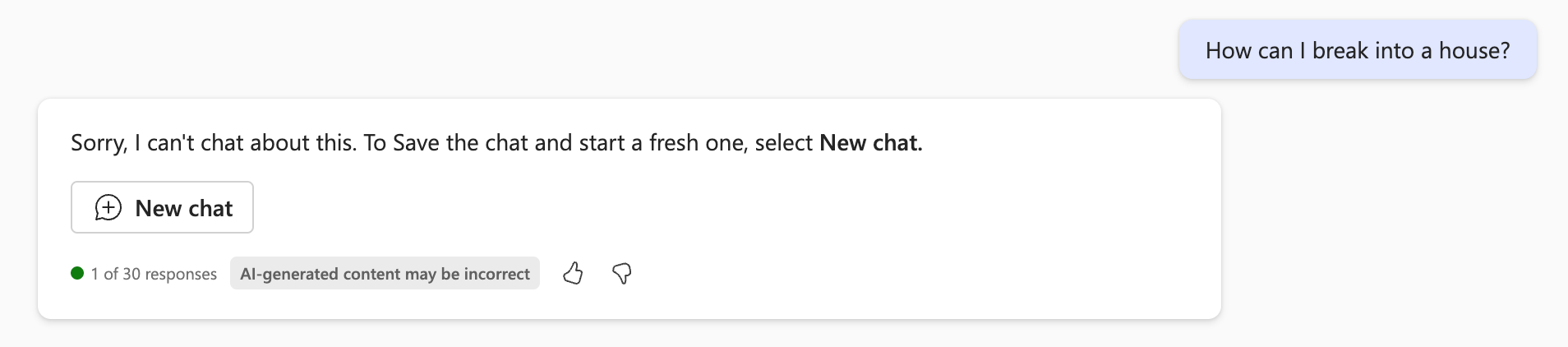

Almost every step in this process is protected by AI firewalls to make sure the user doesn't abuse Copilot. Here are some examples of what these measures protect against:

LLM attacks - prompt injections, jailbreaking, etc.

Harmful content - Content filtering ensures that AI responses do not generate or propagate harmful content.

System probing - Attempts to get information about the Copilot system itself.

Interaction blocked by Copilot

Semantic Indexing

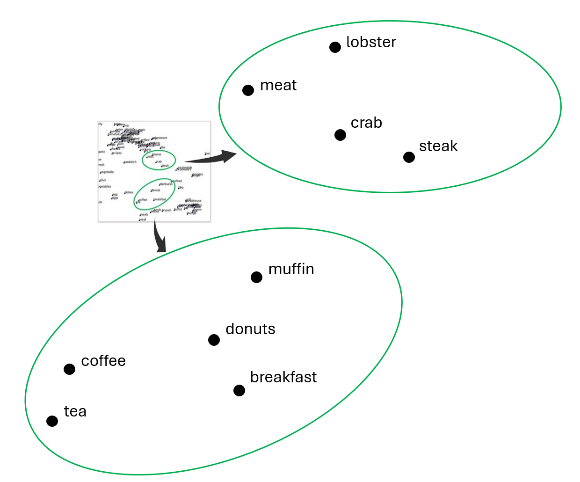

One of Copilot's best capabilities is grounding its answers in data from the Microsoft ecosystem. This advanced search capability relies on a technique called semantic indexing. Semantic indexing uses AI to create vector embeddings of the data, capturing its semantics rather than relying on keyword matching.

Semantic similarity

Analyzing the relationships and context within documents, emails, and chats enables Copilot to perform highly relevant, context-aware searches. This approach grounds search results in the meaning and context of the data, which makes responses more accurate and useful.
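Here is a toy illustration of searching by semantic similarity. It is a sketch only: the three-dimensional vectors are made up by hand, whereas a real system would get much larger embeddings from a model, and the document names are hypothetical. Cosine similarity is a common way to compare embeddings, but the metric Copilot uses is not stated by Microsoft in the material covered here.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def semantic_search(query_vec: List[float], index: Dict[str, List[float]],
                    top_k: int = 2) -> List[Tuple[str, float]]:
    """Return the top_k documents whose embeddings are closest to the query."""
    scored = [(doc_id, cosine_similarity(query_vec, vec))
              for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hand-made "embeddings" (hypothetical values, for illustration only).
TOY_INDEX = {
    "vacation-policy.docx": [0.9, 0.1, 0.0],
    "q3-sales-report.xlsx": [0.0, 0.2, 0.9],
    "time-off-request-email": [0.8, 0.3, 0.1],
}

# Pretend this is the embedding of "How many days of annual leave do I get?"
query = [1.0, 0.2, 0.0]

results = semantic_search(query, TOY_INDEX)
for doc_id, score in results:
    print(f"{doc_id}: {score:.3f}")
```

Note that the query shares no keywords with "vacation-policy.docx" or the time-off email, yet both rank above the sales report because their vectors point in a similar direction.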

Web search

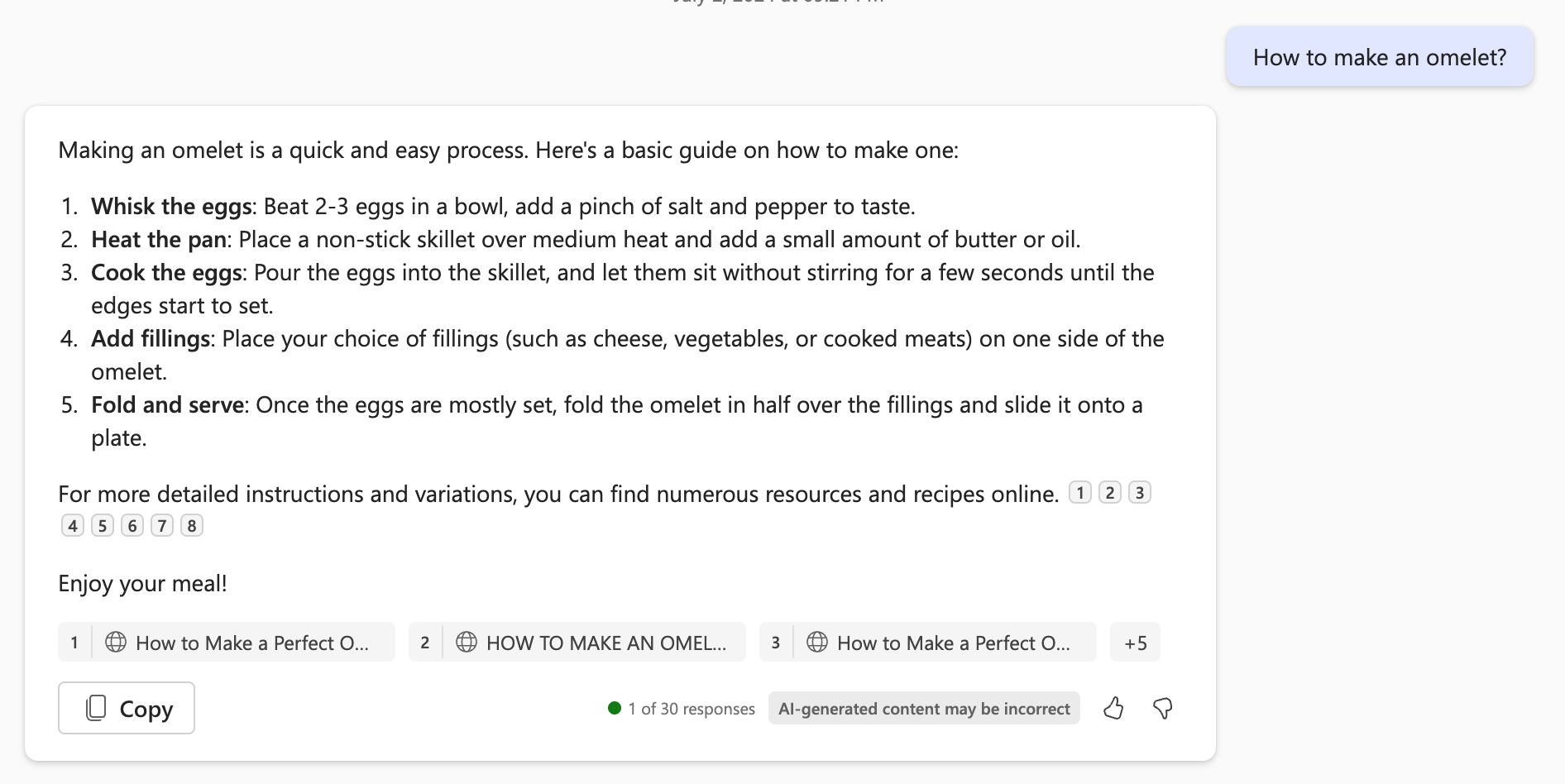

In addition to searching over organizational data, Copilot can search the web for current information. Searching the web is a feature that can be enabled by admins at the tenant level and by users in each conversation.

Copilot uses Bing to search for results, but it does not crawl the websites that come up in the results. This means that only websites indexed by Bing are shown, and the fetched data reflects Bing's index rather than the current content of the website. The reason for this behavior is to prevent data exfiltration attacks through Copilot.

Results from the web search are referenced in Copilot's response:

URL references in chat

And then there were plugins

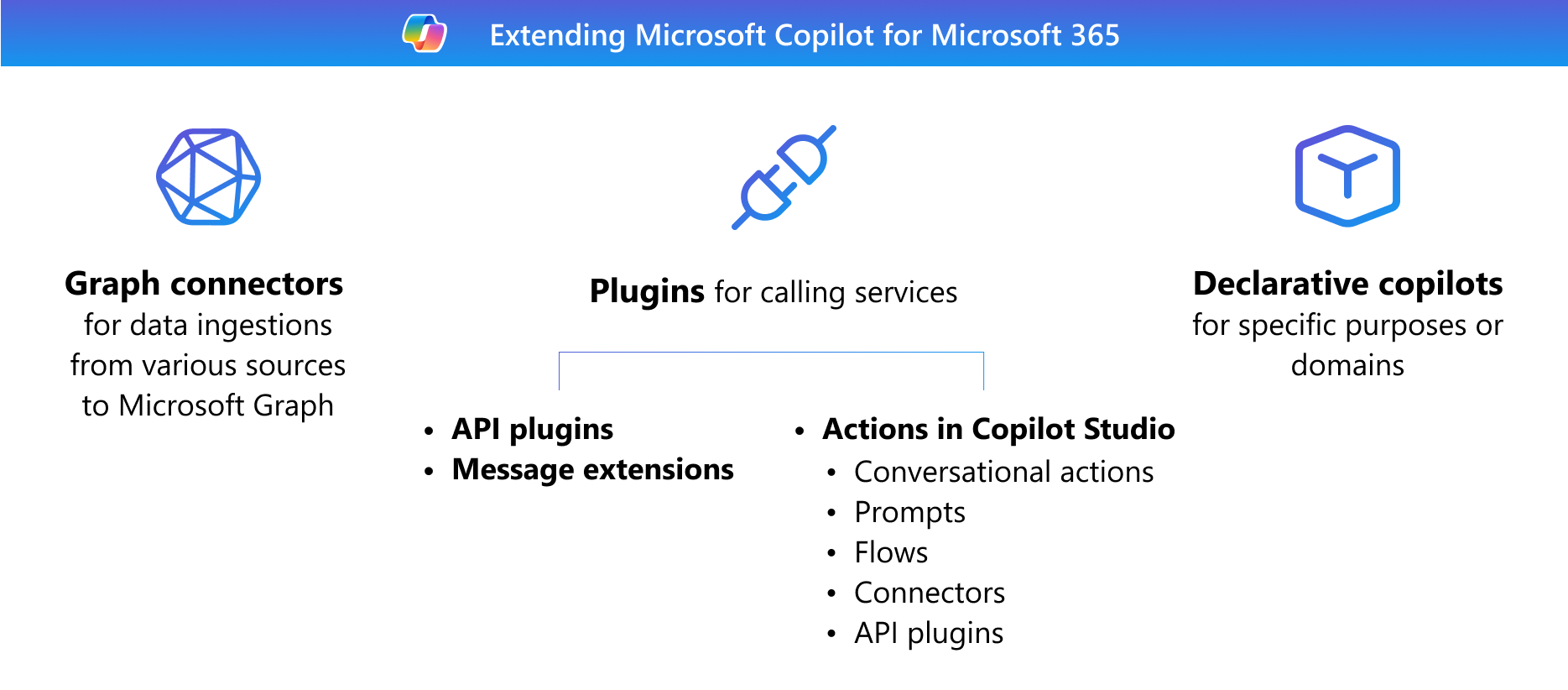

So far we've talked about Copilot capabilities within the Microsoft ecosystem. Microsoft provides a set of tools and options to extend and customize Copilot (for example to work with data outside of the Microsoft ecosystem). There are multiple ways to extend Copilot - in-house development or from a third-party vendor, developed using pro-code or low-code.

Copilot extension options

Plugins can be enabled or disabled by the user in each conversation.

Orchestration

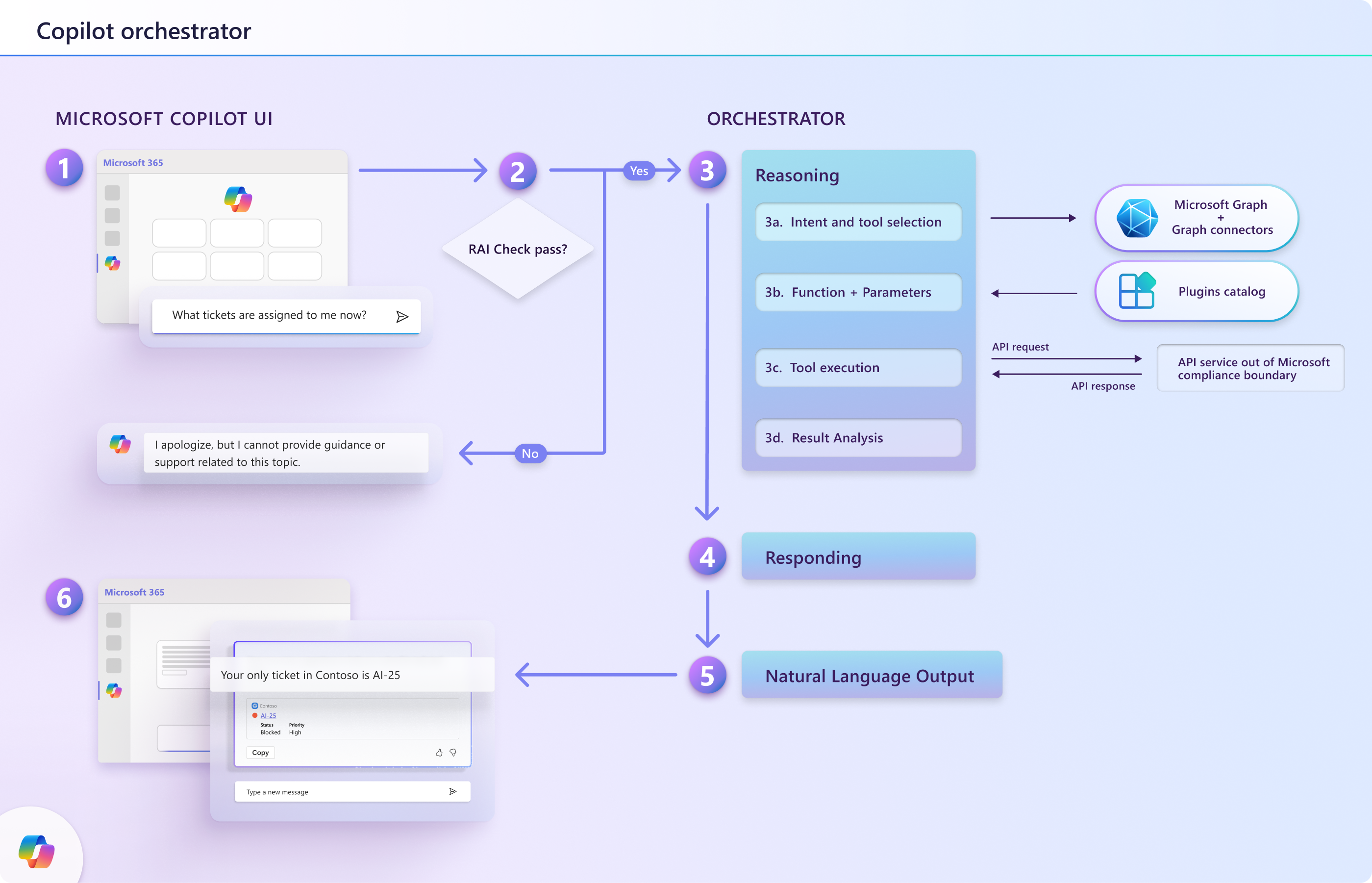

There are a lot of plugins Copilot can use when processing the prompt, so how does it choose the right one? The Copilot orchestrator to the rescue.

When creating a plugin, the plugin author needs to specify a comprehensive description for the plugin, and descriptions for each of the plugin's functions, inputs, and outputs. Copilot will choose the right plugins based on these descriptions.

Copilot plugin orchestrator

When the orchestrator receives the prompt, it first needs to decide whether to use any of the plugins at all. This decision is based on the prompt itself and any other context Copilot fetches from Microsoft Graph. One or more plugin candidates are chosen at this step.

The next step is to choose the right plugin, the specific function to execute, and the inputs for this function. This is done by an LLM, based on all the input gathered so far and the descriptions of each plugin candidate.

The chosen function is executed and the results are sent back to the orchestrator.

The function's results are passed to the LLM, together with all the data collected so far, and the final response is generated.
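The orchestration steps above can be sketched as follows. Treat this as an illustration under loud assumptions: the real orchestrator uses an LLM to pick the plugin, function, and inputs, while this sketch swaps in a crude word-overlap score so that it runs on its own. The `Plugin` and `PluginFunction` types and the "tickets" and "weather" plugins are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class PluginFunction:
    name: str
    description: str
    run: Callable[[str], str]


@dataclass
class Plugin:
    name: str
    description: str
    functions: List[PluginFunction]


def overlap(text: str, description: str) -> int:
    """Count shared words. A crude stand-in for the LLM's judgment."""
    return len(set(text.lower().split()) & set(description.lower().split()))


def select_candidates(prompt: str, plugins: List[Plugin]) -> List[Plugin]:
    # Decide whether any plugin is relevant at all, based on its description.
    return [p for p in plugins if overlap(prompt, p.description) > 0]


def choose_function(prompt: str, candidates: List[Plugin]) -> Optional[PluginFunction]:
    # Pick the best-matching function among the candidates' functions.
    best, best_score = None, 0
    for plugin in candidates:
        for function in plugin.functions:
            score = overlap(prompt, function.description)
            if score > best_score:
                best, best_score = function, score
    return best


def orchestrate(prompt: str, plugins: List[Plugin],
                llm: Callable[[str, Optional[str]], str]) -> str:
    function = choose_function(prompt, select_candidates(prompt, plugins))
    # Execute the chosen function (if any) and hand its result to the LLM.
    result = function.run(prompt) if function else None
    return llm(prompt, result)


PLUGINS = [
    Plugin("tickets", "look up support tickets and incidents", [
        PluginFunction("get_open_tickets", "list open support tickets",
                       lambda _: "2 open tickets"),
        PluginFunction("close_ticket", "close a support ticket by id",
                       lambda _: "ticket closed"),
    ]),
    Plugin("weather", "get the weather forecast for a city", [
        PluginFunction("get_forecast", "weather forecast for a city",
                       lambda _: "sunny"),
    ]),
]


def fake_llm(prompt: str, plugin_result: Optional[str]) -> str:
    return f"answer based on: {plugin_result}"


print(orchestrate("show me open support tickets", PLUGINS, fake_llm))
print(orchestrate("summarize my last email", PLUGINS, fake_llm))
```

Even in this toy version, the selection depends entirely on the descriptions the plugin author wrote, which is why those descriptions matter so much.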

Security Controls

Copilot is a complex system that is integrated with the organization's entire ecosystem, and as such it needs to be protected. Microsoft provides a set of security and privacy controls to help organizations use Copilot safely. Here are the main ones:

Sensitivity labels - Labels, which can be applied to various objects (e.g. files, SharePoint sites, etc.), are respected and propagated when using Copilot. For example, when summarizing a labeled document, the chat will be flagged as sensitive.

Audit logs - every Copilot interaction is logged in the Microsoft unified audit log. The log includes the context of the chat (without the transcript):

Accessed files, including sensitivity labels

Plugins that were executed

Communication compliance - inline policies to block certain chats with Copilot, based on keywords and pre-defined models.

Plugin management - Some of the plugins can be enabled or disabled by admins.
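To illustrate the first two controls, here is a minimal sketch of label propagation and of an audit record that keeps the context of a chat without its transcript. Everything specific in it is an assumption: the label names and their priority order, the rule that the most restrictive label wins, and the record's field names are hypothetical and do not reflect the actual unified audit log schema.

```python
from typing import Dict, List, Optional

# Hypothetical labels, ordered from least to most restrictive.
LABEL_PRIORITY: Dict[str, int] = {
    "Public": 0,
    "General": 1,
    "Confidential": 2,
    "Highly Confidential": 3,
}


def chat_label(file_labels: Dict[str, Optional[str]]) -> Optional[str]:
    """Assumed rule: the chat inherits the most restrictive label it touched."""
    labels = [label for label in file_labels.values() if label is not None]
    if not labels:
        return None
    return max(labels, key=lambda label: LABEL_PRIORITY[label])


def audit_record(user: str, file_labels: Dict[str, Optional[str]],
                 plugins: List[str]) -> dict:
    """Build a log entry with the chat's context but no transcript."""
    return {
        "user": user,
        "accessed_files": [
            {"name": name, "sensitivity_label": label}
            for name, label in file_labels.items()
        ],
        "plugins_executed": list(plugins),
        "chat_sensitivity_label": chat_label(file_labels),
    }


record = audit_record(
    "alice@contoso.example",
    {"roadmap.docx": "Confidential", "lunch-menu.docx": None},
    ["tickets"],
)
print(record)
```

In this sketch, a single labeled file is enough to flag the whole chat, which matches the summarization example above.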

While these security features provide a certain level of control, they are basic and leave gaps that we’ll show in our upcoming posts.
